You may have noticed that I try to visit computer museums whenever I get the chance. Recently I visited Apple Museum Poland in a small village near Warsaw, Poland.

The museum is open on weekends and visits need to be arranged in advance (as it is in a private house). It is easy to get there (Google Maps or other navigation) and totally worth it, whether you are an Apple fan or not.

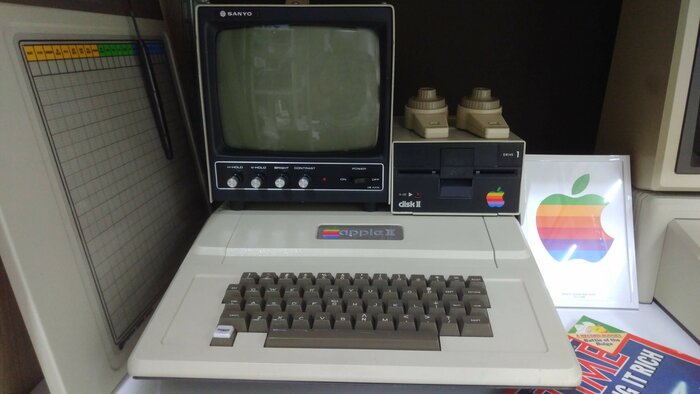

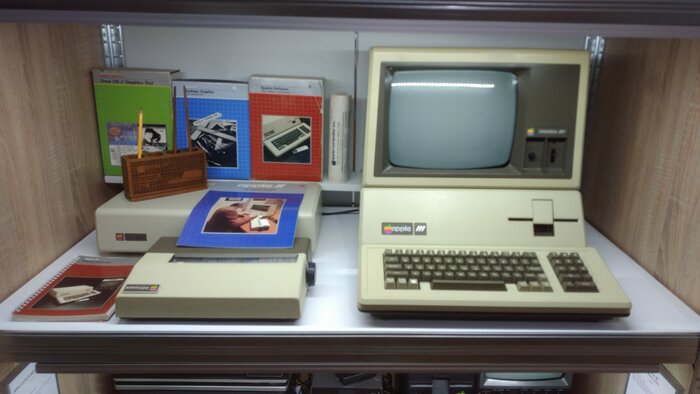

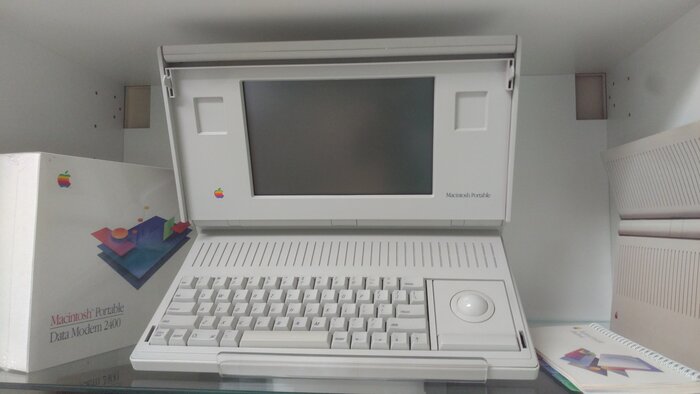

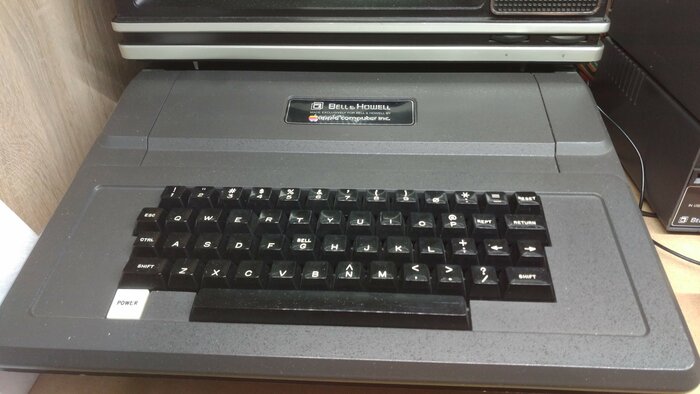

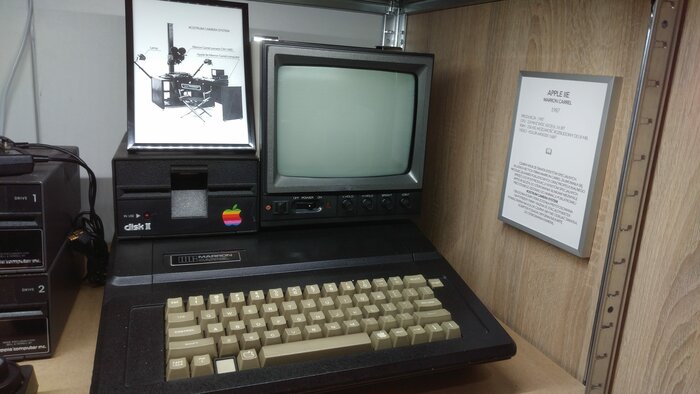

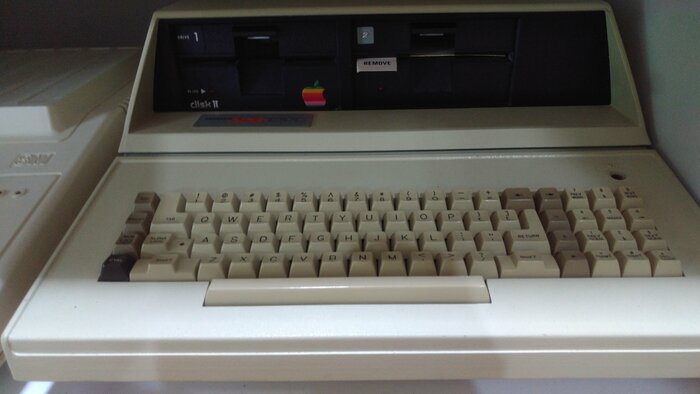

What’s there? Apple computers, from a replica of the Apple I, through various Apple II/III models, to the Lisa, Macintosh machines, PowerBooks, iMacs etc. Some clones too. Some old terminals. An Apollo Computer Graphic Workstation. And that’s not all.

A lot of attention is paid to detail. The same monitors as in the original commercials. The same setups.

As an ARM developer I could not help noticing a shelf filled with the first ARM-powered Apple devices: the Newton in several models.

Computers… What about servers? How many people remember that Apple used to make servers? Big, loud machines.

Of course, like every museum, this one also has some gems:

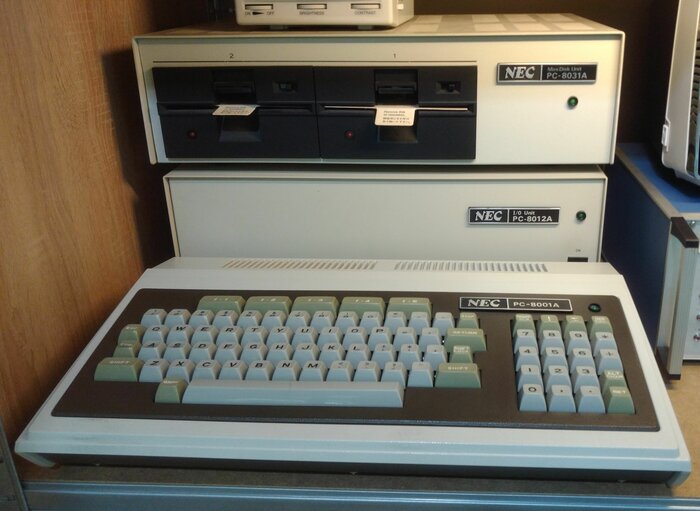

There were also several non-Apple machines there, from that Apollo Computer Graphic Workstation to a Franklin ACE 1200 (and some other Apple II clone). Also some industrial solutions.

There were several other computers, accessories and peripherals on display. Lots of interesting stories told by the museum owner. An incredible amount of stuff not available outside of the Apple dealer network (like official video instructions on LaserDiscs).

I could add more and more photos here, but trust me: it is far better to see it with your own eyes than through a blog post.

Again, I highly recommend it to anyone, whether you are an Apple fan or just like old computers. Just remember to go to Apple Museum Poland on Facebook first to arrange a visit.