During last few months I was working on getting Kolla running on AArch64 and POWER architectures. It was a long journey with several bumps but finally ended.

When I started in January I had no idea how much work it will be and how it will go. Just followed my typical “give me something to build and I will build it” style. You can find some background information in previous post about my work on Kolla.

A lot of failures were present at beginning. Or rather: there was a small amount of images which built. So my first merged change was to do something with Kolla output ;D

- build: sort list of built/failed images before printing

Debian support was marked for removal so I first checked how it looked, then enabled all possible images and migrated from ‘jessie’ to ‘stretch’ release. Reason was simple: ‘jessie’ (even with backports) lacked packages required to build some images.

- debian: import key for download.ceph.com repository

- debian: install gnupg and dirmngr needed for apt-key

- debian: enable all images enabled for Ubuntu

- handle rtslib(-fb) package names and dependencies

- debian: move to stretch

- Debian 8 was not released yet

Both YUM and APT package managers got some changes. For first one I took care to make sure that it fails if there were missing packages (which was very often during builds for aarch64/ppc64le). It allowed to catch some typo in ‘ironic-conductor’ image. In other words: I make YUM behave closer to APT (which always complain about missing packages). Then I made change for APT to behave more like YUM by making sure that update of packages lists was done before packages were installed.

- ironic-conductor: add missing comma for centos/source build

- make yum fail on missing packages

- always update APT lists when install packages

Of course many images could not be built at all for aarch64/ppc64le architectures. Mostly due to lack of packages and/or external repositories. For each case I was checking is there some way for fixing it. Sometimes I had to disable image, sometimes update packages to newer version. There were also discussions with maintainers of external repositories on getting their stuff available for non-x86 architectures.

- kubernetes: disable for architectures other than x86-64

- gnocchi-base: add some devel packages for non-x86

- ironic-pxe: handle non-x86 architectures

- openstack-base: Percona-Server is x86-64 only

- mariadb: handle lack of external repos on non x86

- grafana: disable for non-x86

- helm-repository: update to v2.3.0

- helm-repository: make it work on non-x86

- kubetoolbox: mark as x86-64 only

- magnum-conductor: mark as x86-64 only

- nova-libvirt: handle ppc64le

- ceph: take care of ceph-fuse package availability

- handle mariadb for aarch64/ubuntu/source

- opendaylight: get it working on CentOS/non-x86

- kolla-toolbox: use proper mariadb packages on CentOS/non-x86

At some moment I had over ten patches in review and all of them depended on the base one. So with any change I had to refresh whole series and reviewers had to review again… Painful it was. So I decided to split out the most basic stuff to get whole patch set split into separate ones. After “base_arch” variable was merged life became much simpler for reviewers and a bit more complicated for me as from now on each patch was kept in separate git branch.

- add base_arch variable for future non-x86 work

At Linaro we support CentOS and Debian. Kolla supports CentOS/RHEL/OracleLinux, Debian and Ubuntu. I was not making builds with RHEL nor OracleLinux but had to make sure that Ubuntu ones work too. There was funny moment when I realised that everyone using Kolla/master was building images with Ocata packages instead of Pike ;D

- Ubuntu: use Pike repository

But all those patches meant “nothing” without first one. Kolla had information about which packages are available for aarch64/ppc64le/x86-64 architectures but still had no idea that aarch64 or ppc64le exist. Finally the 50th revision of patch got merged so it now knows ;D

- Support non-x86 architectures (aarch64, ppc64le)

I also learnt a lot about Gerrit and code reviews. OpenStack community members were very helpful with their comments and suggestions. We had hours of talk on #openstack-kolla IRC channel. Thanks goes to Alicja, Duong Ha-Quang, Jeffrey, Kurt, Mauricio, Michał, Qin Wang, Steven, Surya Prakash, Eduardo, Paul, Sajauddin and many others. You people rock!

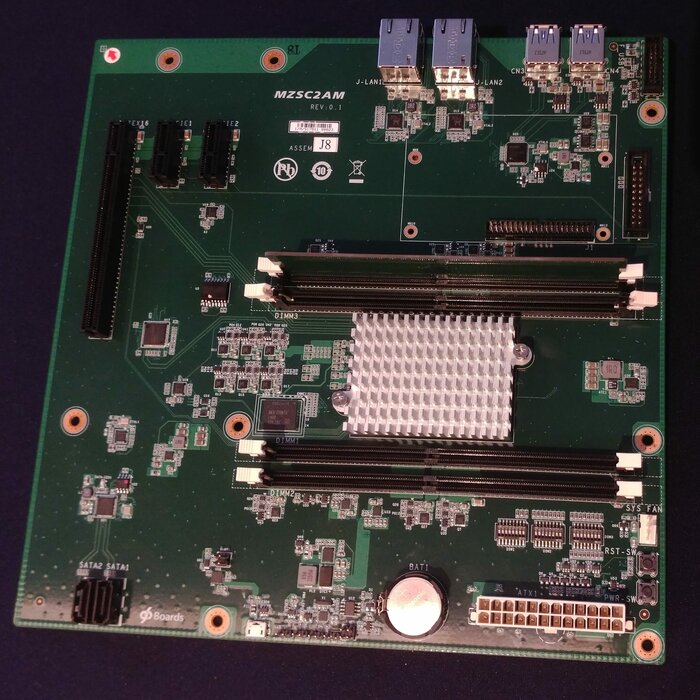

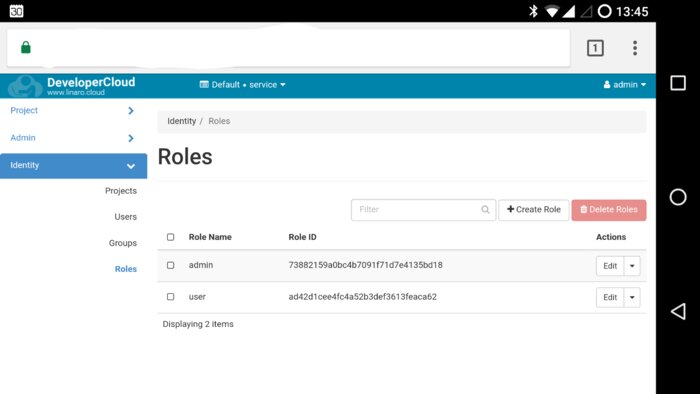

So is my work on Kolla done now? Some of it is. But we need to test resulting images, make a Docker repository with them, update official documentation with information how to deploy on aarch64 boxes (hope that there will be no changes needed). Also need to make sure that OpenStack Kolla CI gets Debian based gates operational and provide them with 3rdparty AArch64 based CI so new changes could be checked.