In 1996 I started my studies at Białystok University of Technology. In one of the early days I found a corridor full of text terminals. Some time later I joined the crowd there and started using an HP-2623A terminal with a SunOS account.

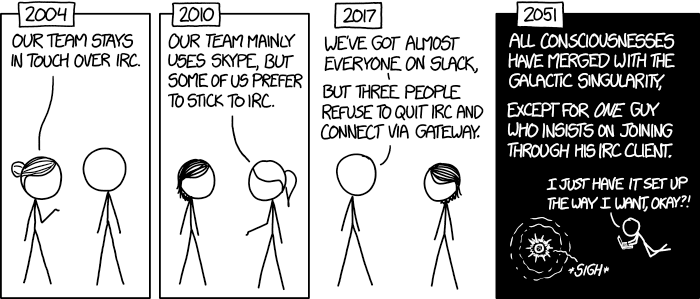

One of the first applications the crowd showed me was ircII (the others were bash, screen, pine and ncftp). So I have been an IRC user for over 24 years now, and this XKCD comic could be about me:

Clients

As I wrote above, I started with ircII. It was painful to use, so some scripts quickly landed: Venom, Lice and a few others. I tried Epic, Epic2000 and some other clients before finally settling on Irssi. I have never been a fan of GUI-based ones.

There was a time when people used CTCP VERSION to check which clients other users were running. In the old Amiga days I usually had mine set to something similar to the AmIRC reply, but with the version number bumped above whatever had been released. Simple trolling for all those curious people. Nowadays it simply replies with “telnet”, and there really was a day when I logged in to an IRC server and exchanged some messages using just telnet :D

Networks

For years I was an IRCnet user. It was popular in Poland, so why bother checking other networks? But as time passed and I became more involved in FOSS projects, I needed to start using Freenode, then OFTC, Mozilla etc.

I checked how old my accounts are, as that nicely shows when I started using each network.

IRCnet

IRCnet was my first IRC network. I stopped using it a few years ago, as all the channels I was on either went quiet or migrated elsewhere (mostly to Freenode).

For years I was active on the Amiga channels #amisia and #amigapl, where I met several friends; I am still in contact with many of them.

Freenode

The journey started on 15th March 2004. That was when I started playing with OpenEmbedded and knew it was a project where I would spend some of my free time (it became a hobby, then a job).

Freenode was also home to CentOS, Fedora, Linaro, OpenStack and several other projects.

    NickServ- Information on Hrw (account hrw):
    NickServ- Registered : Mar 15 10:59:47 2004 (17y 9w 6d ago)
    NickServ- Last addr : ~hrw@redhat/hrw
    NickServ- vHost : redhat/hrw
    NickServ- Last seen : now
    NickServ- Flags : HideMail
    NickServ- *** End of Info ***

OFTC

For me OFTC means Debian. Later it also meant virtualization, as the QEMU and libvirt folks hang out there.

    NickServ- Nickname information for hrw (Marcin Juszkiewicz)
    NickServ- hrw is currently online
    NickServ- Time registered: Fri 10 Jun 2011 17:43:55 +0000 (9y 11m 10d 14:47:05 ago)
    NickServ- Account last quit: Tue 18 May 2021 09:07:55 +0000 (1d 23:23:05 ago)
    NickServ- Last quit message: Remote host closed the connection
    NickServ- Last host: 00019652.user.oftc.net
    NickServ- URL: http://marcin.juszkiewicz.com.pl/
    NickServ- Cloak string: Not set
    NickServ- Language: English (0)
    NickServ- ENFORCE: ON
    NickServ- SECURE: OFF
    NickServ- PRIVATE: ON
    NickServ- CLOAK: ON
    NickServ- VERIFIED: YES

Libera

And since yesterday I have been on Libera as well.

    NickServ- Information on hrw (account hrw):
    NickServ- Registered : May 19 15:26:18 2021 +0000 (17h 32m 35s ago)
    NickServ- Last seen : now
    NickServ- Flags : HideMail, Private
    NickServ- *** End of Info ***

What next?

I have seen and used several instant messaging platforms. All of them were younger than IRC. Many of them are no longer popular, and several no longer exist. IRC survived, so I continue to use it.